Supply chain software is shifting its focus from generating data-driven insights to enabling semi-autonomous, intelligent operations. This change is driven by the steady advancement of artificial intelligence, from foundational statistical models to generative AI, which has led to the rise of AI agents that promise to reshape logistics.

At 25madison, we are building and investing in tailor-made software that radically improves operations and generates new value. We're partnering with a select group of supply chain and industrial leaders to identify opportunities, test, build, and invest in solutions specifically designed for today’s industry challenges.

Traditional statistical models have created the foundation for the modern-day supply chain

To truly appreciate the leap to AI agents, it's essential to first acknowledge the foundational role of traditional statistical models. For decades, interpreting data for informed decision-making has been central to supply chains. From predicting inventory levels and managing forecasts to optimizing routes, supply chain managers have constantly sought more advanced capabilities for accurate forecasting. Even before the launch of new generative AI applications, traditional AI use cases, such as accurate demand forecasting and improved inventory management, enabled “early adopters to improve logistics costs by 15%, inventory levels by 35%, and service levels by 65%,” according to a 2021 report from McKinsey.

To leverage data at scale, enterprises have historically relied on regression models, advanced statistical algorithms, and big-data frameworks such as Hadoop and Spark. Leading software vendors in supply chain, logistics, and warehouse management have incorporated these methods into their applications, enabling their customers to surface insights. Visibility platforms and digital twins have moved beyond point solutions to become comprehensive tools that analyze data across the supply chain. According to an IDC blog, 70% of companies in 2020 focused on improving their supply chain visibility. These capabilities are especially critical when global networks face disruptions like the COVID-19 pandemic, workforce shortages, or tariff-driven shifts in supply chains.

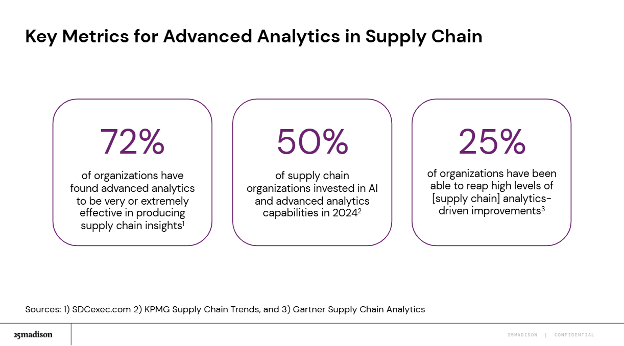

Despite the investment in these capabilities, what’s striking is that only a small segment of firms (25%) have seen high levels of improvement from supply chain analytics. Compared to more embedded processes like demand forecasting, general supply chain analytics frequently requires a user to manually dive into the data to find something actionable. This highlights a persistent challenge for most organizations: translating advanced analytical insights into concrete operational improvements.

Generative AI enables new use cases for exploring and understanding supply chain data

Building upon this foundation, generative AI introduced a new paradigm, extending AI’s capabilities beyond prediction to natural language understanding. Large language models (LLMs) can ingest large amounts of unstructured text, an advancement necessary to capture insights from where supply chain data actually lives: procurement records, contracts, and various other documents. Rather than replacing traditional statistical models, which are still necessary for generating accurate predictions, LLMs are a valuable complementary capability for gaining richer supply chain insights.

When GenAI burst onto the scene in 2023, there was a ton of momentum and conversation around potential use cases across industries and functions. In the C-suite, AI prompted additional conversations about traceability, data security, and responsibility. According to an article from BCG in 2023, “more than 50% of executives are deeply worried about incorporating it [GenAI] into their operations.”

To address issues like traceability and enhance domain specificity, techniques such as Retrieval Augmented Generation (RAG) and libraries like LangChain quickly emerged. Given that older GenAI models typically had smaller context windows, RAG was also useful for managing and limiting the amount of context fed into a prompt. For example, OpenAI’s GPT-3.5 Turbo only offered a 16K token context window (where a token is a basic unit of text an LLM uses for processing), compared to today's GPT-4.1 with a 1M token context window. By selectively retrieving data for the LLM to process, RAG keeps only the most relevant information in the context window, which works within memory limitations and generally leads to lower costs.
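To make the idea of selective retrieval concrete, here is a minimal sketch in Python. It is an illustration under loud assumptions, not how any particular RAG library works: relevance is scored by simple word overlap rather than embeddings, tokens are approximated by whitespace-separated words, and the function names and sample documents are hypothetical.

```python
def approx_tokens(text: str) -> int:
    """Rough token estimate: one token per whitespace-separated word (an assumption)."""
    return len(text.split())


def overlap_score(query: str, doc: str) -> int:
    """Count the distinct query words that also appear in the document."""
    return len(set(query.lower().split()) & set(doc.lower().split()))


def retrieve_within_budget(query: str, docs: list[str], token_budget: int) -> list[str]:
    """Pick the most relevant documents whose combined size fits the token budget."""
    scored = [(overlap_score(query, doc), doc) for doc in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # most relevant first
    selected, used = [], 0
    for score, doc in scored:
        if score == 0:
            break  # everything after this point shares no words with the query
        cost = approx_tokens(doc)
        if used + cost <= token_budget:
            selected.append(doc)
            used += cost
    return selected


# Hypothetical supply chain snippets standing in for a document store.
docs = [
    "Supplier Acme contract renews in March with a 30 day notice period",
    "Warehouse B reported a forklift maintenance delay",
    "Acme contract includes a late delivery penalty of 2 percent",
]
context = retrieve_within_budget("Acme contract penalty", docs, token_budget=25)
prompt = "Context:\n" + "\n".join(context) + "\n\nQuestion: What is the Acme penalty?"
```

Only the two Acme snippets make it into the prompt; the unrelated warehouse note is left out, and a tighter budget would drop the less relevant contract snippet as well. A production system would typically swap the word-overlap score for vector similarity and use the model's own tokenizer for counting.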

In the supply chain domain, most early GenAI use cases centered around creating intelligent chatbots or co-pilots that could interact with existing supply chain data. A common example involved connecting an SCM database to a GenAI model via RAG, enabling users to explore data through natural language questions. Another early application was analyzing contracts for sourcing workflows, where vendor-specific details and artifacts were uploaded into the model's context. Enterprises adopted these use cases by exploring foundational models from major players like OpenAI, Google, or Anthropic, collaborating with new domain-specific startups, or leveraging upgraded versions of their already-implemented SCM software.

AI agents pave the path forward towards a more autonomous supply chain

While both traditional analytics and generative AI have transformed how we gain insights from data, a critical gap persists: converting these insights directly into action. This is precisely where AI agents emerge as a game changer, paving the path towards an autonomous (or semi-autonomous with humans in the loop) supply chain.

Newer frameworks, like the Model Context Protocol (MCP) from Anthropic, were introduced to “connect AI assistants to the systems where data lives.” This has opened the door for standardization, as agents can leverage MCP servers to access data, context, and functions across the enterprise. New use cases are now emerging that combine the precision of traditional statistical models with the flexibility of generative AI, pushing the boundaries of what’s possible. Let’s walk through an example:

[Image: Artificial Intelligence in Supply Chain]

This illustration presents an agentic AI workflow designed for demand forecasting, where the AI actively reasons through the necessary tasks. Activated by either a direct user prompt or an external event, such as news affecting market demand, the agent interprets the request. It can then choose to invoke a specific statistical demand forecasting model, record the resulting forecast in a database, and retrieve relevant business context to add explainability. A human verification step provides an oversight layer. The AI agent could then integrate with other existing applications, sharing the updated forecast figures across the entire operational landscape.
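The workflow described above can be sketched in a few lines of Python. This is a simplified illustration, not the implementation behind the figure: a keyword check stands in for the LLM's reasoning about which tool to call, a moving average stands in for the statistical forecasting model, and the table layout, function names, and sample data are all hypothetical.

```python
import sqlite3
from dataclasses import dataclass
from typing import Optional


@dataclass
class Forecast:
    sku: str
    units: float
    rationale: str               # business context attached for explainability
    status: str = "pending_review"  # waits for the human verification step


def moving_average_forecast(history: list[float], window: int = 3) -> float:
    """Stand-in statistical model: mean of the last `window` observations."""
    if not history:
        raise ValueError("history must contain at least one observation")
    recent = history[-window:]
    return sum(recent) / len(recent)


def run_forecast_agent(trigger: str, sku: str, history: list[float],
                       conn: sqlite3.Connection) -> Optional[Forecast]:
    """Interpret a trigger, invoke the forecasting tool, and record the result."""
    # Assumption: a real agent would let an LLM decide whether and which tool to call.
    text = trigger.lower()
    if "forecast" not in text and "demand" not in text:
        return None  # the request is not about demand forecasting
    units = moving_average_forecast(history)
    rationale = f"Average of the last {min(3, len(history))} periods; triggered by: {trigger}"
    conn.execute(
        "INSERT INTO forecasts (sku, units, rationale, status) VALUES (?, ?, ?, ?)",
        (sku, units, rationale, "pending_review"),
    )
    conn.commit()
    return Forecast(sku, units, rationale)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE forecasts (sku TEXT, units REAL, rationale TEXT, status TEXT)")
result = run_forecast_agent(
    "News event: update demand forecast", "SKU-1", [100.0, 120.0, 110.0, 130.0], conn
)
```

The forecast is stored with a `pending_review` status rather than being pushed to other applications right away, which leaves room for the human verification step before anything downstream changes.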

To deliver on this value proposition for brands, 25madison-backed EverX utilizes headless agents – AI entities that operate autonomously in the background. These agents are designed to accurately forecast demand by considering seasonality and other key variables, and then integrate stock level data to guide optimal inventory and purchasing decisions.

As we move towards more autonomous systems, well-defined human-in-the-loop workflows become increasingly important. A blog post from AWS from April 2025 says that “human involvement helps establish ground truth, validates agent responses before they go live, and enables continuous learning through feedback loops.” In our example use case, having a human verify the agent's updated forecast would be a safeguard before updating other applications. Having verification and accountability behind these agents enables sound business decisions.
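One way to picture this safeguard is as an approval gate that sits between the agent's output and every downstream application. The Python sketch below is purely illustrative: the class names, the status values, and the `DownstreamSystem` stand-in for an ERP or inventory tool are all assumptions.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PendingForecast:
    sku: str
    units: float
    status: str = "pending"          # pending -> approved | rejected
    reviewer: Optional[str] = None   # recorded for accountability


@dataclass
class DownstreamSystem:
    """Hypothetical stand-in for an ERP or inventory application."""
    received: list = field(default_factory=list)

    def update(self, forecast: PendingForecast) -> None:
        self.received.append((forecast.sku, forecast.units))


def review(forecast: PendingForecast, approved: bool, reviewer: str) -> PendingForecast:
    """Record the human decision and who made it."""
    forecast.status = "approved" if approved else "rejected"
    forecast.reviewer = reviewer
    return forecast


def publish(forecast: PendingForecast, systems: list[DownstreamSystem]) -> bool:
    """Share the forecast with other applications only after human approval."""
    if forecast.status != "approved":
        return False
    for system in systems:
        system.update(forecast)
    return True


erp = DownstreamSystem()
forecast = PendingForecast(sku="SKU-1", units=120.0)

blocked = publish(forecast, [erp])            # not yet reviewed, so nothing is sent
review(forecast, approved=True, reviewer="planner@example.com")
published = publish(forecast, [erp])          # approved, so the ERP stand-in is updated
```

Keeping the reviewer's identity on the record is what ties verification to accountability: every figure that reaches another system can be traced back to a person who signed off on it.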

What happens next?

The undeniable momentum surrounding AI agents signals a pivotal moment for supply chain automation. However, emerging startups and established players need to prove that they are providing transformative value, rather than simply automating for automation’s sake. The success of agents is determined by their consistency and reliability. For high-impact applications, issues like hallucinations or errors would make these systems unusable, even with a human-in-the-loop process. An employee’s time should not be spent fixing errors that an AI agent made.

Looking ahead, there will be an added emphasis on the management and security of AI agents and their data. It will continue to be necessary to identify and control the types of data shared between businesses and AI software vendors. Once individual agents prove their value, orchestrating multiple agents for complex workflows (agents controlling other agents?) could unlock greater efficiencies.

Our team at 25madison remains dedicated to researching, surfacing, and investing in potential opportunities at the intersection of supply chain and AI. If you are interested in connecting as a founder, co-investor, industry expert, or design partner, please feel free to reach out to a member of our team.

Please note the information published is not intended to be investment advice.

Written By Rohan Johar

MBA Candidate, Yale School of Management, Class of 2026

Rohan brings extensive experience in enterprise technology, having most recently served as an AI Specialist at Google, where he led strategic initiatives in generative AI adoption for large-scale clients. He previously held roles at Oracle focused on cloud architecture and data analytics. Rohan holds a B.A. in Computer Science from Boston University.